The Importance of Manual Review

In the last decade, the fraud detection and identity verification spaces have rallied around machine learning. One often overlooked component in making these models successful is a strong risk operations team. A strong risk operations team enables a financial institution to shift the risk frontier outward (more approvals and fewer losses) while also delivering a better customer experience. An institution with a strong manual review program can be much more proactive, identifying fraud when it first occurs rather than after losses accumulate and make their way into future iterations of the model.

When criminals identify a weakness in a fraud system, they typically exploit it aggressively, sometimes pushing fraud rates from a baseline of 1% to as high as 90% of applications. If a model has never seen a particular modus operandi (MO) before, it will be unable to differentiate fraudulent applications from legitimate ones. A risk analyst, however, can properly tag the data and supply the data science team with the inputs needed to identify the impending attack. One recent example: to defraud states of unemployment insurance (UI) benefits, fraudsters started using victims' own addresses when opening demand deposit accounts (DDAs). Historically, an address match was a sign of a legitimate application, because a fraudster needed physical access to the financial product (typically a credit card) to perpetrate the fraud. Now, however, fraudsters had changed tactics to exploit the short window during which money can be moved via ACH before the victim receives a welcome kit in the mail. The major red flag was repeated use of VoIP phone numbers, a signal that a model could naively miss by over-indexing on the value of the address match.

A proactive manual review team identified the common thread: state benefits were being transferred into these accounts even though they had address matches at account opening. Because risk operations had already identified the key signals, the subsequent investigation was narrower and the fix was implemented faster. This had two benefits:

- The data science team knew exactly what type of signals to include in the model

- The data science team knew that a new fraud vector existed and that they should ship a new model (one strong justification for a risk operations team is simply knowing when a new model is needed).
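The signal the review team surfaced can be expressed as a simple rule. The following is a minimal Python sketch, not SentiLink's implementation: the `Application` fields (`phone_is_voip`, `address_match`) and the reuse threshold `min_reuse` are hypothetical, and it assumes a phone line-type lookup is already available. It flags applications whose VoIP number is shared across several applications, deliberately ignoring the address match that a naive model would over-weight.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Application:
    app_id: str
    phone: str
    phone_is_voip: bool   # hypothetical: result of a phone line-type lookup
    address_match: bool   # applicant address matches identity records

def flag_shared_voip(apps, min_reuse=3):
    """Return IDs of applications whose VoIP number appears on >= min_reuse applications.

    address_match is intentionally not consulted: in this MO the address
    matched, so it carried no protective signal.
    """
    voip_counts = Counter(a.phone for a in apps if a.phone_is_voip)
    return [a.app_id for a in apps
            if a.phone_is_voip and voip_counts[a.phone] >= min_reuse]

# Made-up example: three applications share one VoIP number.
apps = [
    Application("a1", "555-0100", True, True),
    Application("a2", "555-0100", True, True),
    Application("a3", "555-0100", True, True),
    Application("a4", "555-0199", False, True),
]
print(flag_shared_voip(apps))  # ['a1', 'a2', 'a3']
```

In practice a rule like this would more likely become a model feature than a hard decline, but it shows how an analyst's observation translates directly into an input the data science team can use.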

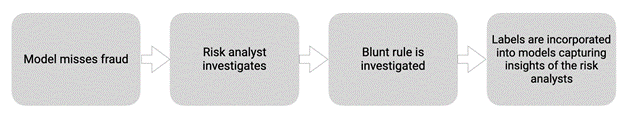

The work flow was:

One of the most impactful gains from a strong manual review team is improvement to credit models. One goal of a risk operations team is to ensure that every loss caused by fraud is classified as a fraud loss rather than a credit loss. In our experience, 10 to 20% of losses currently classified as credit losses are actually attributable to fraud. If these fraud losses are not filtered out when building a credit model, they can significantly distort it and even cause it to draw backwards conclusions.

For example, suppose the typical FICO scores among applicants for a particular lender are 650-750. If there is an unidentified fraud ring using identities with credit scores around 800, the credit model could learn that individuals with 800+ credit scores are worse credit risks, since those data points default in the training set. As a result, a legitimate applicant with an 800+ credit score might be rejected by the credit model even though they may represent an unusually low credit risk.
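The inversion can be reproduced with toy numbers. The sketch below is purely illustrative: the loans are made up, and the `fraud_tag` field is a hypothetical stand-in for whatever label a manual review team attaches. It compares default rates below and at-or-above an 800 FICO score with and without fraud-tagged loans in the data.

```python
from dataclasses import dataclass

@dataclass
class Loan:
    fico: int
    defaulted: bool
    fraud_tag: bool  # hypothetical label assigned by manual review

def default_rate_by_band(loans, exclude_fraud, cutoff=800):
    """Default rate below and at-or-above `cutoff`, optionally dropping fraud-tagged loans."""
    kept = [loan for loan in loans if not (exclude_fraud and loan.fraud_tag)]
    bands = {
        f"<{cutoff}": [loan for loan in kept if loan.fico < cutoff],
        f">={cutoff}": [loan for loan in kept if loan.fico >= cutoff],
    }
    return {name: sum(loan.defaulted for loan in rows) / len(rows) if rows else float("nan")
            for name, rows in bands.items()}

# Toy portfolio (made-up numbers):
#  - 100 legitimate loans at FICO 700, 5 of which default
#  - 20 legitimate loans at FICO 810, none of which default
#  - 10 fraud-ring loans at FICO 805, all of which "default"
loans = ([Loan(700, i < 5, False) for i in range(100)]
         + [Loan(810, False, False) for _ in range(20)]
         + [Loan(805, True, True) for _ in range(10)])

print(default_rate_by_band(loans, exclude_fraud=False))  # {'<800': 0.05, '>=800': 0.3333333333333333}
print(default_rate_by_band(loans, exclude_fraud=True))   # {'<800': 0.05, '>=800': 0.0}
```

With the fraud losses left in, the 800+ band looks more than six times riskier than the rest of the book; once the manually tagged fraud is removed, it looks safer, which is the relationship a credit model should learn.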

More generally, because fraudulent applications come from a very different (and highly non-stationary) distribution than legitimate ones, they should be treated as outliers. Including such outliers in a model's training data can significantly degrade its results.

Not all fraud is the same; consequently, not all treatment strategies should be the same. If a financial institution targets only losses, it may find itself with inadequate treatment strategies for specific MOs. At SentiLink, our risk operations team tags the following types of fraud, among several others:

- Synthetic fraud

- ID theft

- J1 / F1 visa fraud

- Credit washing

- Credit boosting

If an application is suspected of being synthetic, it would be appropriate to request eCBSV (the Social Security Administration's electronic Consent Based SSN Verification) for certain users. If an application is suspected of ID theft, it would instead be appropriate to ask the applicant to upload a copy of their government-issued ID. The only way to distinguish these types of fraud at the point of application is to have models that target each one, and the best way to target each one separately is to have a taxonomy and a risk operations team equipped to identify each issue separately.

Without a manual review team that tags each of these separately, a financial institution would be reduced to triggering every verification flow whenever something looks risky (additional friction) or no verification flow at all (additional losses).

By tagging each of these MOs individually, a data science team can build a separate model for each and know when to ask only for eCBSV versus only for a government-issued ID.
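A minimal sketch of that routing, assuming one model score per fraud type. The `FraudType` and `Step` names, the 0-1 score scale, and the thresholds are hypothetical; a real decision engine would cover many more MOs and policy rules. Each tagged MO maps to its own verification step, so a high synthetic score requests only eCBSV and a high ID theft score requests only a government-issued ID upload.

```python
from enum import Enum

class FraudType(Enum):
    SYNTHETIC = "synthetic"
    ID_THEFT = "id_theft"

class Step(Enum):
    APPROVE = "approve"
    ECBSV = "ecbsv"                  # SSA electronic Consent Based SSN Verification
    GOV_ID_UPLOAD = "gov_id_upload"  # applicant uploads a government-issued ID

# Hypothetical mapping from each tagged MO to its targeted treatment.
TREATMENT = {
    FraudType.SYNTHETIC: Step.ECBSV,
    FraudType.ID_THEFT: Step.GOV_ID_UPLOAD,
}

def choose_steps(scores, thresholds):
    """Return the verification steps triggered by per-MO model scores.

    scores, thresholds: dicts keyed by FraudType (hypothetical 0-1 scale).
    Only MOs whose score meets their threshold add friction.
    """
    steps = {TREATMENT[mo] for mo, score in scores.items() if score >= thresholds[mo]}
    return steps or {Step.APPROVE}

thresholds = {FraudType.SYNTHETIC: 0.7, FraudType.ID_THEFT: 0.6}  # hypothetical values
print(choose_steps({FraudType.SYNTHETIC: 0.9, FraudType.ID_THEFT: 0.1}, thresholds))
# {<Step.ECBSV: 'ecbsv'>}
```

When both scores are high, both steps are requested, but only for applications that genuinely look like both MOs, rather than for every application that looks generically risky.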

Manual review by the risk operations team is what enables quick responses to emerging fraud vectors, better credit modeling, and optimal treatment strategies. While machine learning is the technology that powers most fraud systems, manual review is critical to developing best-in-class solutions.


Posted in: AF Education